- Type: Enhancement
- Resolution: Fixed
- Priority: P4
- Affects Version/s: 17, 21, 22, 23
- Component/s: hotspot

- Resolved In Build: b24

| Issue | Fix Version | Assignee | Priority | Status | Resolution | Resolved In Build |
|---|---|---|---|---|---|---|
| JDK-8338671 | 21.0.5 | Aleksey Shipilev | P4 | Resolved | Fixed | b04 |

```

void Method::mask_for(int bci, InterpreterOopMap* mask) {
  methodHandle h_this(Thread::current(), this);
  // Only GC uses the OopMapCache during thread stack root scanning
  // any other uses generate an oopmap but do not save it in the cache.
  if (Universe::heap()->is_gc_active()) {
    method_holder()->mask_for(h_this, bci, mask);
  } else {
    OopMapCache::compute_one_oop_map(h_this, bci, mask);
  }
  return;
}

```

...but the GC-active flag is only set by `IsGCActiveMark`, which for Shenandoah, for example, is only used in `ShenandoahGCPauseMark`. The problem is that `CollectedHeap::is_gc_active` is specified to answer `true` only for STW GCs.

So when we run in concurrent phases, we just compute the oop maps and drop them on the floor after use. We should consider relaxing this check and allowing collected heap implementations to cache oop maps even in concurrent phases.
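The cost difference between the two branches can be illustrated with a toy model. This is only a sketch, not HotSpot code: `ToyOopMapCache`, `OopMask`, `scan_frames`, and the `may_use_cache` flag are hypothetical names invented for illustration, and the mask formula is arbitrary. It mimics the shape of `Method::mask_for`, where the cache is consulted only when the caller is allowed to use it.

```cpp
#include <cstdint>
#include <map>
#include <utility>

// Hypothetical stand-in for an interpreter oop map (not the HotSpot type).
using OopMask = std::uint64_t;

struct ToyOopMapCache {
    std::map<std::pair<int, int>, OopMask> entries;  // (method id, bci) -> mask
    int computations = 0;                            // number of slow-path runs

    // Stand-in for the expensive bytecode analysis; the formula is arbitrary.
    OopMask compute(int method_id, int bci) {
        ++computations;
        return (static_cast<OopMask>(method_id) << 32) | static_cast<OopMask>(bci);
    }

    // Same shape as the check above: use the cache only when allowed,
    // otherwise compute the mask and let the caller discard it after use.
    OopMask mask_for(int method_id, int bci, bool may_use_cache) {
        if (!may_use_cache) {
            return compute(method_id, bci);
        }
        auto key = std::make_pair(method_id, bci);
        auto it = entries.find(key);
        if (it == entries.end()) {
            it = entries.emplace(key, compute(method_id, bci)).first;
        }
        return it->second;
    }
};

// Scans `frames` identical interpreter frames and returns how many times
// the slow path had to run.
int scan_frames(int frames, bool may_use_cache) {
    ToyOopMapCache cache;
    for (int i = 0; i < frames; ++i) {
        cache.mask_for(/*method_id=*/7, /*bci=*/42, may_use_cache);
    }
    return cache.computations;
}
```

In this model, scanning 1024 identical frames runs the slow path 1024 times when the cache is off limits and once when it is allowed, which is the kind of redundancy a deep recursive stack exposes. The model ignores concurrency entirely; a real cache shared with concurrent GC threads also has to deal with the locking concerns discussed here.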

This might need adjustments in how OopMapCache does locking, mostly for lock ordering with the locks that concurrent GCs hold, see

The slowness in processing interpreter frames can be replicated with Shenandoah like this:

```

% cat ManyThreadsStacks.java
public class ManyThreadsStacks {
    static final int THREADS = 1024;
    static final int DEPTH = 1024;
    static volatile Object sink;

    public static void main(String... args) {
        // Start THREADS threads, each parked at the bottom of a DEPTH-deep stack.
        for (int t = 0; t < THREADS; t++) {
            new Thread(() -> work(DEPTH)).start();
        }
        // Allocate continuously to keep triggering GC cycles.
        while (true) {
            sink = new byte[100_000];
        }
    }

    public static void work(int depth) {
        if (depth > 0) {
            work(depth - 1);
        }
        while (true) {
            try {
                Thread.sleep(100);
            } catch (Exception e) {
                return;
            }
        }
    }
}

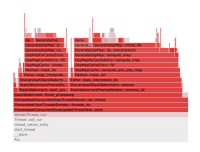

% build/linux-aarch64-server-release/images/jdk/bin/java -Xmx1g -Xms1g -XX:+UseShenandoahGC -Xlog:gc -Xint ManyThreadsStacks.java 2>&1 | grep "marking roots"
[1.259s][info][gc] GC(0) Concurrent marking roots 73.293ms
[1.350s][info][gc] GC(1) Concurrent marking roots 73.405ms
[1.441s][info][gc] GC(2) Concurrent marking roots 73.303ms
[1.532s][info][gc] GC(3) Concurrent marking roots 73.115ms
[1.622s][info][gc] GC(4) Concurrent marking roots 73.156ms
[1.813s][info][gc] GC(5) Concurrent marking roots 73.998ms

# With the patch that enables access to OopMapCache from concurrent phases:

% build/linux-aarch64-server-release/images/jdk/bin/java -Xmx1g -Xms1g -XX:+UseShenandoahGC -Xlog:gc -Xint ManyThreadsStacks.java 2>&1 | grep "marking roots"
[1.191s][info][gc] GC(0) Concurrent marking roots 6.273ms
[1.214s][info][gc] GC(1) Concurrent marking roots 6.183ms
[1.235s][info][gc] GC(2) Concurrent marking roots 6.150ms
[1.254s][info][gc] GC(3) Concurrent marking roots 6.280ms
[1.274s][info][gc] GC(4) Concurrent marking roots 6.178ms

```

- backported by
  - JDK-8338671 Allow using OopMapCache outside of STW GC phases (Resolved)
- is blocked by
  - JDK-8331573 Rename CollectedHeap::is_gc_active to be explicitly about STW GCs (Resolved)
  - JDK-8331714 Make OopMapCache installation lock-free (Resolved)
- relates to
  - JDK-8186042 Optimize OopMapCache lookup (Resolved)
  - JDK-8317466 Enable interpreter oopMapCache for concurrent GCs (Closed)
  - JDK-8334594 Generational ZGC: Deadlock after OopMap rewrites in 8331572 (Resolved)
  - JDK-8317240 Promptly free OopMapEntry after fail to insert the entry to OopMapCache (Resolved)

- links to
  - Commit: openjdk/jdk/d999b81e
  - Commit(master): openjdk/jdk21u-dev/ed77abd4
  - Review(master): openjdk/jdk21u-dev/610
  - Review(master): openjdk/jdk/19229