-

Type:

JEP

-

Resolution: Delivered

-

Priority:

P4

P4

-

Component/s: hotspot

-

Ivan Walulya & Thomas Schatzl

-

Feature

-

Open

-

Implementation

-

-

M

-

M

-

522

Summary

Increase application throughput when using the G1 garbage collector by reducing the amount of synchronization required between application threads and GC threads.

Goals

-

Reduce the G1 garbage collector’s synchronization overhead.

-

Reduce the size of the injected code for G1’s write barriers.

-

Maintain the overall architecture of G1, with no changes to user interaction.

Non-Goals

- It is not a goal to match the throughput of the G1 garbage collector with that of any other garbage collector in the HotSpot JVM.

Motivation

The Garbage-First collector (G1), which is the default garbage collector of the HotSpot JVM, is designed to balance latency and throughput. However, achieving this balance can sometimes impact application performance compared to throughput-oriented collectors such as the Parallel and Serial collectors.

Relative to Parallel, G1 performs more of its work concurrently with the application, reducing the duration of GC pauses and thus improving latency. Unavoidably, this means that application threads must share the CPU with GC threads, and coordinate with them. This synchronization both lowers throughput and increases latency.

Description

We propose to improve both throughput and latency by reducing the amount of synchronization required between application threads and GC threads.

Background

G1 reclaims memory by copying live objects in the heap into new memory regions, making the regions previously occupied by those objects available for the allocation of new objects. References to the original objects, stored in the fields of other objects in other regions, must somehow be updated to point to their new copies.

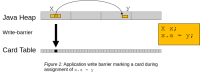

To find the references that need to be updated, G1 does not scan the entire heap, which would be inefficient. Instead, G1 keeps track of cross-region object references in a data structure called a card table, which is updated every time an object reference is stored in a field. These updates are performed by small fragments of code called write barriers, which G1 injects into the application in cooperation with the JIT. During a GC pause, G1 only needs to scan the card table to find the objects containing fields that require updating.

Scanning the card table is efficient. However, some applications frequently allocate new objects and store references in the fields of those objects. In such applications the card table may grow so large that scanning it causes G1 to exceed its pause-time goal. To avoid that, G1 optimizes the card table in the background via separate optimizer threads. For that to work, however, the optimizer threads must synchronize with application threads to avoid conflicting updates to the card table. Accordingly, the write-barrier code injected into application threads is complex and therefore slow, and so is the code that optimizes the card table.

Proposal

We propose to introduce a second card table so that optimizer and application threads can run freely. The write barriers in application threads update the first card table without any synchronization, making them simpler and faster. Meanwhile, the optimizer threads work on the second, initially empty, card table.

When G1 detects that scanning the first card table during a GC pause would likely exceed the pause-time goal, it atomically swaps the card tables. Application threads resume updating the empty, formerly second table, while optimizer threads work on the full, formerly first table without any further synchronization. G1 repeats this process as necessary to keep the amount of work on the active card table under control.

Performance

This change reduces overhead both while the application is running and during garbage collection pauses.

The main benefit comes from the reduced synchronization between application and optimizer threads. In applications that heavily modify object-reference fields, we have observed throughput gains in the range of 5–15%.

Some additional benefit comes from the write barriers being simpler and faster. For example, on x64, write barriers are reduced from around 50 instructions to just 12. Owing to this, we have observed throughput gains of up to 5% even in applications that do not heavily modify object-reference fields.

The second card table is more efficient than the auxiliary data structure that previously kept track of modified references, so garbage collection pause times also decrease slightly.

Native memory footprint

The second card table has the same capacity as the first, and uses the same amount of additional native memory. Each card table requires 0.2% of Java heap capacity, corresponding to an additional 2MB of native memory usage per 1GB of Java heap capacity.

The second card table replaces the auxiliary data structure that previously kept track of modified references. In some cases the second card table is smaller than that data structure would have been. Even in cases where the second card table is larger, however, resulting in greater usage of native memory, this should not be a significant concern. In JDK 20 and JDK 21 we removed other large data structures from G1 that in total used more than eight times the size of the second card table.

Alternatives

We previously prototyped several other approaches to improving the throughput of G1:

-

Use operating-system-dependent synchronization mechanisms to atomically swap the card table. This required operating-system-specific code or even operating-system-version-specific code, increasing code complexity extraordinarily without providing the expected benefit. Here we have avoided this problem by using generic thread-local handshakes (JEP 312).

-

Keep the existing auxiliary data structure for tracking modified references in order to decrease the amount of code change. This approach limited the degree to which write barriers could be simplified, thereby limiting the potential performance improvement.

-

Have two separate G1 garbage collector modes: One mode would work as before, while a new mode would use the simpler write barriers of the Parallel garbage collector and disable background card-table optimization. Supporting two modes of operation for G1 would add significant complexity to the code base. It would also be inconvenient for users, who would have to select the mode at JVM startup. Adoption could, furthermore, be limited since users who choose the new mode might as well just use the Parallel collector, which would still be slightly faster than G1 on pure throughput workloads.

We made other attempts to decrease synchronization between application threads and optimizer threads by modifying the write barriers. These approaches exhibited severe throughput regressions in some situations with only modest gains otherwise.

Overall, we think that the current proposal has the best trade-off between code complexity and performance.

Risks and Assumptions

This is an intrusive change to critical components of the G1 collector’s interaction with application threads. There is, therefore, a non-negligible risk of bugs that may cause failures and introduce performance regressions. To mitigate this risk, we will conduct careful code reviews and perform extensive testing.

We assume there is no need to provide additional user control over the optimization threads beyond what is already provided. The user may continue to completely disable the optimization threads via -XX:-G1UseConcRefinement or limit their number via -XX:G1ConcRefinementThreads=<number>. We assume that these two options continue to cover all necessary use cases not otherwise better handled by internal heuristics.

We assume that the throughput gains offered by this change, combined with the aforementioned native memory savings in earlier releases, justify the additional memory usage of the second card table.

- relates to

-

JDK-8253230 G1 20% slower than Parallel in JRuby rubykon benchmark

-

- Closed

-

-

JDK-8132937 G1 compares badly to Parallel GC on throughput on javac benchmark

-

- Open

-

-

JDK-8233438 Use zero_filled optimization for young gen regions when committing young regions

-

- Open

-

-

JDK-8273137 Improve skipping card enqueuing during gc if there are no remembered sets in old gen

-

- Open

-

-

JDK-8290736 Improve scalability of Merge Heap Roots/Merge Log Buffers

-

- Open

-

-

JDK-8367549 G1: Investigate impact of G1ReviseYoungLengthTask

-

- Open

-

-

JDK-8342382 Implement JEP 522: G1 GC: Improve Throughput by Reducing Synchronization

-

- Resolved

-

-

JDK-8344951 Stabilize write barrier micro-benchmarks

-

- Resolved

-

-

JDK-8166899 Deferred card marking of large objArrays generates lots of unnecessary work

-

- Closed

-

-

JDK-8062128 Dramatic difference between UseConcMarkSweepGC and UseG1GC

-

- Closed

-

-

JDK-8226197 Reduce G1’s CPU cost with simplified write post-barrier and disabling concurrent refinement

-

- Closed

-

-

JDK-8226731 Remove StoreLoad in G1 post barrier

-

- Closed

-

-

JDK-8230187 Throughput post-write barrier for G1

-

- Closed

-

-

JDK-8231734 G1DirtyCardQueueSet assumed to use 0 start for G1FreeIdSet

-

- Closed

-

-

JDK-8233990 G1 card refinement: joining, prefetching

-

- Closed

-

-

JDK-8256282 Investigate putting card table base into G1 thread local data

-

- Closed

-

-

JDK-8286586 G1: Return memory in Dirty Card buffer allocator to OS

-

- Closed

-

-

JDK-8293616 Concurrent refinement by mutators only processes one buffer

-

- Closed

-

-

JDK-8375531 G1: Add asserts that card table base is not embedded

-

- Closed

-